Methods Manual – Part 1: Effectiveness Review Methods

Section Overview

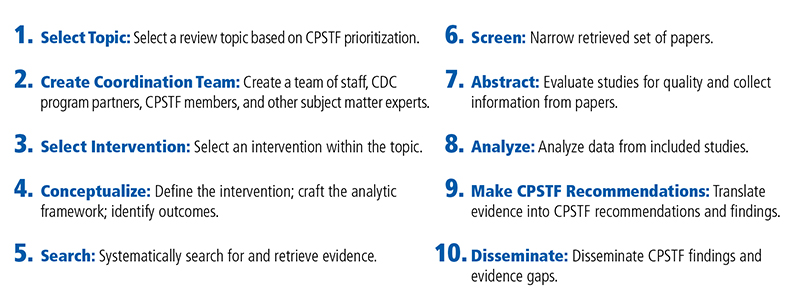

The CGP, with guidance from CPSTF, conducts systematic reviews of interventions using a rigorous ten-step process.

CPSTF decides on the topic for review based on their prioritization process. From there, a coordination team (hereafter called “the team”) is convened to guide the review. The team selects an intervention approach (a type of intervention that is used to address a specific public health problem, such as mass media campaigns to increase safety belt use) within the topic area for review.

Each team follows an extensive conceptualization process in which they draft a definition, inclusion and exclusion criteria, analytic framework, research questions, and applicability factors.

Next, the team consults with a research librarian at the CDC Library to draft a search strategy. The research librarian then conducts the systematic search.

Once candidate publications are obtained from the systematic search, the team begins a three-stage screening process to identify potential papers for inclusion.

The team narrows the search yield through the screening process and abstracts relevant information from the remaining papers, using Community Guide criteria to examine the quality of these papers.

Then, the team analyzes the data, calculating summary effect estimates and assessing applicability.

After completing the analysis, the team presents the findings to CPSTF, which translates the evidence into CPSTF recommendations and broadly disseminates the findings to public health practitioners.

Figure 2: Ten Steps in The Community Guide Effectiveness Review Process [B]

Step 1: Select Priority Topics

CPSTF periodically reviews and selects priority topics considered for systematic review. They use a data-driven process (Lansky et al. 2022 [PDF – 174 KB]) to select priority topics, starting with consideration of the Healthy People topics. CPSTF engages partners to provide input on priority issues and topics. For each topic, CPSTF applies several criteria and engages in deliberation and voting to select the set of priority topics.

CPSTF uses a data-driven approach to select priority topics for systematic reviews.

Selection Criteria for Topics

The criteria used by CPSTF to select topics have evolved over time and generally include the following.

- Alignment: The degree to which potential intervention approaches within the topic align with federal or national efforts.

- Balance: The degree to which CPSTF has a balance across public health topics and can fill evidence gaps.

- Burden: The degree to which a topic reflects conditions with high burden or severity.

- Coverage: The ability for CPSTF to develop a robust set of recommendations based on a sufficient body of evidence.

- Disparities: The presence of important health disparities that may be addressed by population health intervention approaches.

- Impact: The degree to which CPSTF findings would be relevant and helpful to the field.

- Preventability: The degree to which population-based interventions could achieve prevention outcomes in this topic.

- Partner interest: The degree to which key partners demonstrate interest in, or priority of, the topic.

CPSTF selected a set of nine priority topics to guide their systematic reviews for the period 2020-2025. The priority topics are as follows:

- Heart Disease and Stroke Prevention

- Injury Prevention

- Mental Health

- Nutrition, Physical Activity, and Obesity

- Preparedness and Response

- Social Determinants of Health

- Substance Use

- Tobacco Use

- Violence Prevention

Step 2: Convene a Coordination Team

CPSTF relies on a coordination team (the team) to direct the conduct of the systematic review. For each intervention review, the team is involved in the entire review process, providing guidance at every step. The team identifies important intervention approaches to consider for systematic reviews; guides development of the intervention definition, relevant research questions, appropriateness of data analysis and communication of results; identifies important evidence gaps and questions for further research; responds to CPSTF requests for additional information or analyses to address CPSTF questions and concerns; and ensures that the final products (e.g., CPSTF findings, peer-reviewed publications, dissemination materials) are useful to the end user.

In Brief

Coordination teams support CPSTF in assessing whether an intervention is effective.

Each team comprises 6-10 people, including CPSTF members and Liaisons, scientists, subject matter experts, and other public health experts.

Team members meet regularly to discuss issues involved in conducting the systematic review.

Coordination Team Members

Generally, the team consists of 6-10 people, representing diverse perspectives to cover the multidisciplinary nature of topics reviewed [2].

- Community Guide Program staff: Led by a senior scientist, CGP staff conduct the day-to-day work of the team.

- Subject Matter Experts: Researchers and public health practitioners from federal and non-federal agencies, academia, and other organizations provide expertise in the scientific, programmatic, or policy issues related to the topic area of interest.

- CPSTF Member(s): At least one CPSTF member with relevant interests and expertise in the subject matter serves on the coordination team.

- CPSTF Liaison(s): At least one representative from a Liaison organization serves on the coordination team to represent the perspectives of those in the community who would implement CPSTF recommendations.

Step 3: Select an Intervention Approach

The team develops a comprehensive list of intervention approaches addressing a specific priority topic for potential review. CPSTF approves the list of potential reviews. To determine the order in which intervention approaches are reviewed, the team and CPSTF consider factors such as the burden of disease and preventability, feasibility, interest from partners, and availability of resources.

The intervention approaches selected for CPSTF reviews aim to improve population health.

In Brief

Intervention approaches for CPSTF reviews focus on improving public health or decreasing risk within a group.

The team develops a comprehensive list of possible intervention approaches for CPSTF reviews.

Examples of Intervention Approaches

- Services: Team-based care to improve blood pressure control

- Behavioral or social programs: Interventions to reduce risky sexual behavior, HIV, other sexually transmitted infections, and pregnancy among youth

- Environmental or policy: Coordinated built environment approaches combining elements of pedestrian or cycling transportation systems with land use and environmental design features

The team may look at relevant, high-quality existing systematic reviews (ESRs) to inform the selection of an intervention approach and their initial conceptualization of the intervention.

Step 4: Define the Conceptual Approach

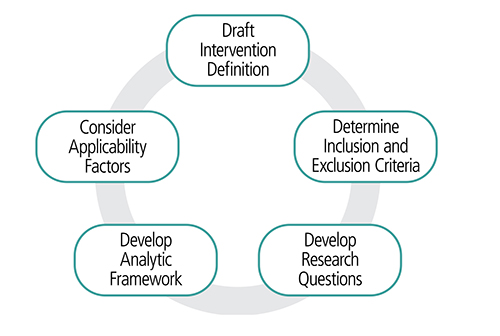

Each team uses a common process that addresses five elements to define the conceptual approach: draft an intervention definition, set inclusion and exclusion criteria, develop research questions and an analytic framework, and consider applicability factors.

The elements may be considered at the same time and the process evolves over the course of the systematic review.

In Brief

Each review uses a conceptualization process that considers five key elements.

The elements may be addressed at the same time and the process may go back-and-forth.

Five Elements of the Conceptual Approach

1. Intervention Definition

The intervention definition is a combination of a definition and a description of the intervention. The intervention definition uses terms common to the field that are easily understood by users.

Components of an Intervention Definition

- Must haves are the essential aspects of the intervention. These directly inform inclusion and exclusion criteria for the review.

- May haves are important but nonessential intervention characteristics that may vary depending on how an intervention is implemented.

Figure 3: Conceptualization process characteristics that may affect the intervention of interest [C]

2. Inclusion and Exclusion Criteria

These criteria determine the intended scope of the review and whether a study belongs in the body of evidence.

- Specific Community Guide criteria that must always be satisfied:

  - Study must be conducted in a World Bank-designated high-income country.

  - Study must be published in English.

- Other factors to consider include:

  - Community Guide reviews generally consider all types of comparative study designs (e.g., experimental studies with allocated control groups, observational studies with concurrent or historical control groups, and observational studies with single group before-after comparisons of change).

  - Study design exclusions are usually topic, intervention, or outcome specific.

  - The PICOS framework (outlined below) can help focus systematic review inclusion and exclusion criteria [1].

PICOS Framework [1]

Population: Who is the population of interest?

Intervention: What are some must-have or must-not-have intervention characteristics (this is informed by the “must have” section of the definition)?

Comparison: What or who is being compared to the intervention group to determine effectiveness? Will this be consistent across all the expected studies?

Outcome: What outcomes of interest need to be reported?

Study designs: Which study designs should be included that will allow you to answer your research question?
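
The two criteria that must always be satisfied, plus review-specific design exclusions, can be expressed as a simple check on each study record. The following is a minimal sketch, not a Community Guide tool: the `Study` fields, the `exclusion_reasons` helper, and the small sample of high-income countries are all illustrative assumptions. A real review would use the World Bank's current classification.

```python
from dataclasses import dataclass

# Illustrative sample only (assumption); use the World Bank's current list in practice.
HIGH_INCOME_SAMPLE = frozenset({"United States", "Canada", "Australia", "Japan"})


@dataclass
class Study:
    country: str   # where the study was conducted
    language: str  # publication language
    design: str    # free-text design label (hypothetical field)


def exclusion_reasons(study, high_income=HIGH_INCOME_SAMPLE,
                      excluded_designs=frozenset()):
    """Return reasons a study fails the criteria; an empty list means it passes."""
    reasons = []
    if study.country not in high_income:
        reasons.append("not conducted in a high-income country")
    if study.language.strip().lower() != "english":
        reasons.append("not published in English")
    # Design exclusions are review specific, so they are passed in by the caller.
    if study.design in excluded_designs:
        reasons.append("study design excluded for this review")
    return reasons
```

Returning the list of reasons, rather than a yes/no answer, keeps the information needed later to report why studies were excluded.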

3. Research Questions

Throughout conceptualization, the team develops and refines research questions.

- Research questions ask if the intervention works. Does the intervention improve study participants’ health outcomes and quality of life, and reduce morbidity and mortality? Through what intermediate steps are these outcomes achieved?

- Additional research questions may address implementation of the intervention. Does intervention effectiveness change based on intervention settings, population or intervention characteristics? Answers to these questions help determine applicability of the intervention across different population groups and settings.

4. Analytic Framework

The analytic framework [PDF – 297 KB] is a graphic display postulating how the intervention works to affect downstream health outcomes.

Components in a Community Guide analytic framework include:

- Intervention under review

- Population of focus for the intervention

- Intermediate outcomes

- Health outcomes or well-established proxy for a health outcome (these are usually recommendation outcomes for Community Guide reviews, the basis of CPSTF recommendations)

- Key potential effect modifiers (e.g. participant or intervention characteristics that may modify the effect of the intervention)

- Additional benefits and potential harms occurring outside the causal pathway of the intervention

5. Applicability Factors

Applicability factors answer the questions of what works when, where, and for whom. These answers help those who are planning to implement interventions based on CPSTF recommendations and findings select what might work in their community.

During the systematic review, teams make a priori hypotheses (described below) for each applicability factor and note considerations for the review. They then collect and assess relevant data to present to CPSTF to generate a formal conclusion on applicability factors.

Sources for Evidence and Considerations

- Body of evidence: The team looks across the body of evidence to assess the differences among study participants, and intervention settings and characteristics to determine whether the intervention is effective across different populations and conditions.

- Individually included studies: The team assesses stratified analysis from each included study based on factors of interest and other factors that were not considered by the authors but determined to be important for the intervention under review.

- Evidence beyond included studies: The team examines the broader literature and considers subject matter expertise from team members to indicate whether the intervention is effective across settings and population groups not examined by included studies.

  - For example, if all included studies were conducted in high-income countries outside the United States, can the team expect the review findings to be generally applicable in the United States? If all studies were implemented in urban settings, can the team generalize the findings to rural areas?

Applicability Factors Usually Considered

Community Guide systematic reviews always assess settings (inside or outside the United States), population density (rural, suburban, urban), race and ethnicity, and socioeconomic status indicators (e.g. income, education, employment). The team may include additional applicability factors based on the intervention under review (e.g. intervention characteristics).

Determining a Priori Hypotheses

Once the team has a list of applicability factors for consideration, they look at each factor and determine a priori hypotheses for that factor, based on theory or the team members’ expertise.

Three Possible Hypotheses

- Probably applicable: The team does not expect this factor to influence intervention effectiveness. For example, mobile phone-based interventions to remind patients to take medicine probably work in all high-income countries, including the United States.

- Probably effect modification: This factor likely will influence intervention effectiveness differently for different groups. For example, for diabetes control interventions, the intervention effect will vary based on patients’ baseline blood glucose level. The broader literature suggests that patients with higher baseline blood glucose will experience more reduction in blood glucose because there is more room for improvements.

- Unsure: The team cannot make a decision based on available information. For example, for interventions to increase cancer screening, the team may not know if the intervention will work the same for population groups with different racial backgrounds, and there is not enough information from the broader literature or the team’s subject matter expertise to make an informed decision.

Step 5: Systematically Search the Literature

Prior to conducting the search for evidence, the team will have read existing reviews and other background literature to inform the intervention approach. CGP staff then work with a CDC librarian to determine search terms and which databases to search. Together these form the review’s search strategy.

In Brief

Search strategies are broad enough to capture all relevant evidence and minimize bias.

Sources of Potential Search Terms

- Existing reviews on the intervention approach of interest

- Terms from a sample of studies that fit the intervention definition

- Inclusion criteria

Regularly Searched Databases

- Cochrane

- Embase

- Medline

- PsycINFO

- PubMed

Teams may choose to include grey literature and government reports. These decisions are often dependent on the intervention approach.

Step 6: Screen the Studies Identified in the Search

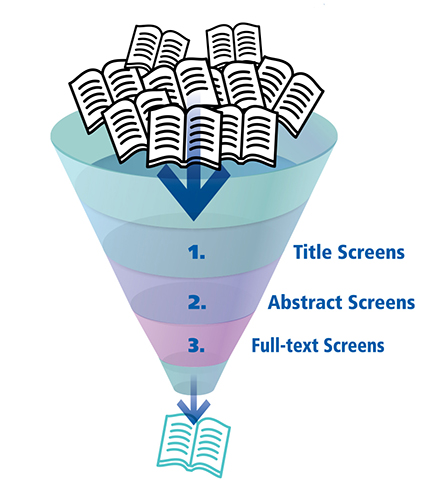

Screening begins once the team obtains the library search results. Community Guide reviews commonly have several thousand references in the search yield. The search is broad to ensure that all relevant studies are captured. The CGP uses a systematic review management software program to help with the screening process.

The team screens based on the inclusion and exclusion criteria in place.

In Brief

Teams use a three-stage screening process to determine which studies are included or excluded during systematic reviews.

Three-Stage Screening Process

Each stage in the screening process is more discriminating than the previous one, resulting in studies with greater relevancy.

1. Title screen: Using the search results for individual studies, the team screens each paper by title to quickly eliminate papers that are unrelated to the review. If the title is related to the intervention of interest, the paper moves on to the abstract screen.

   - Requires one screener

2. Abstract screen: After passing the title screen, the team screens each paper’s abstract, using more specific criteria (e.g., population or outcome of interest, from high income country, or relevant intervention). Papers not reporting outcomes of interest might pass through this stage if they provide useful information for the review (e.g., background information or benefits and harms of intervention).

   - Requires one or two screeners

3. Full-text screening: After identifying potentially relevant papers through abstract screening, the team reads the full-text versions of the articles. At this stage, the team has enough information to explore more detailed inclusion criteria (e.g., does the paper evaluate an intervention that fits the definition?). The team may revisit conceptualization (Step 4) and determine whether adjustments are needed (e.g., the analytic framework may need another pathway or additional health outcomes).

   - Requires two screeners

Figure 4: Three-Stage Screening Process [D]

Each screening stage is more discriminating than the previous one, resulting in studies with greater relevancy.

Once screening is complete, the team can create a PRISMA [5] flow diagram. The PRISMA flow diagram depicts the flow of information through the different phases of a systematic review. (See example of PRISMA flow diagram.) It maps out the number of papers identified, included and excluded, and the reasons for exclusions. The team will include this diagram in their presentation to CPSTF as well as in the publication of the review.
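
The counts that feed a flow diagram of this kind can be tallied directly from the screening decisions. The sketch below assumes a hypothetical record format in which each paper carries the stage at which it was excluded (or `None` if it was included) and a free-text reason. Neither the field names nor the `screening_flow` function come from the Community Guide or the PRISMA statement.

```python
from collections import Counter

# Stage order taken from the three-stage process described above.
STAGES = ["title", "abstract", "full_text"]


def screening_flow(records):
    """Tally papers screened and excluded at each stage, with exclusion reasons.

    records: list of dicts with keys "excluded_at" (a stage name or None)
    and "reason" (string, ignored for included papers).
    Returns (per-stage summaries, number of included papers).
    """
    remaining = len(records)
    flow = []
    for stage in STAGES:
        excluded = [r for r in records if r["excluded_at"] == stage]
        flow.append({
            "stage": stage,
            "screened": remaining,
            "excluded": len(excluded),
            "reasons": dict(Counter(r["reason"] for r in excluded)),
        })
        remaining -= len(excluded)
    return flow, remaining


example = [
    {"excluded_at": "title", "reason": "unrelated to review"},
    {"excluded_at": "title", "reason": "unrelated to review"},
    {"excluded_at": "abstract", "reason": "not a high-income country"},
    {"excluded_at": "full_text", "reason": "intervention does not fit definition"},
    {"excluded_at": None, "reason": ""},
]
flow, included = screening_flow(example)
```

Each stage's `screened` count equals the previous stage's count minus its exclusions, which is the relationship a flow diagram makes visible.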

Did you know? The coordination team may add inclusion criteria to narrow a review. However, removing criteria to broaden the review requires rescreening.

Step 7: Abstract Relevant Information from Selected Studies

Once the team narrows the search yield through the screening process, the team abstracts relevant information to assess the quality of the evidence from each included study. Abstractors record:

- Study design

- Pertinent details of the intervention

- Methods used in the study to evaluate its effectiveness

- Outcomes of interest

- Potential benefits and harms of the intervention

- Information for applicability assessment

In Brief

Teams abstract relevant information to assess the quality of the evidence from each study and calculate summary measures.

Abstraction Process

Each team relies on two independent abstractors to ensure that abstraction is comprehensive and accurate. To avoid undue influence, abstractors independently read the study and collect relevant information. Then, abstractors meet to discuss and reconcile differences. If differences persist, abstractors will present the issue to the full coordination team. Each abstractor uses a detailed evidence table to collect the data from studies. CGP staff use a standardized form that is modified for each review to collect the relevant data determined through the conceptualization of the intervention approach (Step 4). Because the form is standardized, bias in collecting data is minimized. (See example detailed evidence table template).

These abstracted data are further summarized into a summary evidence table in which the most relevant details of the intervention and its estimated effects are recorded for each study (See example summary evidence table [PDF – 503 KB]).
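
The reconciliation step between two independent abstractors amounts to comparing their completed forms field by field. A minimal sketch follows, assuming each form is a plain dictionary keyed by field name. The field names and the `find_discrepancies` helper are hypothetical and do not reflect the actual Community Guide abstraction form.

```python
def find_discrepancies(form_a, form_b):
    """Return {field: (value_a, value_b)} for every field where the forms differ.

    A field missing from one form is reported with None for that abstractor.
    """
    fields = sorted(set(form_a) | set(form_b))
    return {
        name: (form_a.get(name), form_b.get(name))
        for name in fields
        if form_a.get(name) != form_b.get(name)
    }


abstractor_1 = {"study_design": "prospective cohort", "sample_size": 420, "setting": "urban"}
abstractor_2 = {"study_design": "prospective cohort", "sample_size": 402}

to_discuss = find_discrepancies(abstractor_1, abstractor_2)
```

The resulting dictionary is the agenda for the abstractors' meeting; anything still unresolved afterward would go to the full coordination team, as described above.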

Assess Study Quality and Assign Quality of Execution

The abstraction form includes a quality of execution assessment framework to address threats to internal and external validity. This tool is divided into six domains and includes nine possible limitations for each study [4]. (See “Quality of Execution Assessment Framework” below.) Instructions are included in the abstraction form to provide explicit decision rules and examples of how to answer the question in various circumstances [4].

A study is deemed to have good quality of execution if there was zero to one limitation, fair if two to four limitations, and limited if more than four limitations. Studies with limited quality of execution are excluded from the remainder of the review [4].

| Domain | Potential Reasons for Limitations | Maximum Number of Limitations per Domain |
|---|---|---|
| Description | Was the study population well described? Was the intervention well described? What was done? When was it done? How was it done? Where was it done? How was it targeted to the study population? | 1 |
| Sampling | Was the sampling frame or universe adequately described? Were the inclusion and exclusion criteria clearly specified? Was the unit of analysis the entire eligible population or a probability sample at the point of observation? | 1 |
| Measurement | Were outcome measures valid and reliable? Was exposure to the intervention assessed? If yes, were these exposure measures valid and reliable? | 2 |
| Data Analysis | Appropriate statistical testing conducted? Reporting of analytic methods and tests? Appropriate controlling for design/outcome/population factors? Other issues with data analysis | 1 |
| Interpretation of Results | >80% completion rate? Data set complete? Study groups comparable at baseline? If not, was confounding controlled before examination of intervention effectiveness? Biases that might influence the interpretation of results including other events/interventions that might have occurred at the same time | 3 |
| Other | Other biases or concerns not included in the previous domains (e.g., evidence of selective reporting) | 1 |
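
The scoring rule stated above (zero to one limitation is good, two to four is fair, more than four is limited) and the per-domain maximums in the table can be combined in a short function. This is a sketch under stated assumptions: the `quality_of_execution` name and the dictionary input format are illustrative, not part of the abstraction form.

```python
# Per-domain maximums copied from the framework table; they sum to nine.
DOMAIN_MAX = {
    "Description": 1,
    "Sampling": 1,
    "Measurement": 2,
    "Data Analysis": 1,
    "Interpretation of Results": 3,
    "Other": 1,
}


def quality_of_execution(limitations):
    """Classify a study from its limitations per domain.

    limitations: dict mapping domain name -> number of limitations assigned.
    Domains that are not listed count as zero.
    """
    total = 0
    for domain, count in limitations.items():
        if domain not in DOMAIN_MAX:
            raise ValueError(f"unknown domain: {domain}")
        if not 0 <= count <= DOMAIN_MAX[domain]:
            raise ValueError(f"{domain} allows 0 to {DOMAIN_MAX[domain]} limitations")
        total += count
    if total <= 1:
        return "good"
    if total <= 4:
        return "fair"
    return "limited"  # excluded from the remainder of the review
```

For example, a study with two Measurement limitations and one Sampling limitation has three in total and would be rated fair.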

Assessing Suitability of Study Design and Quality of Execution

The team assesses the strength and utility of the study design in evaluating effectiveness of the intervention [2, 4].

Categories of Suitability

- Greatest: randomized controlled trial, non-randomized trial, prospective cohort, other design with concurrent comparison

- Moderate: interrupted time series, retrospective cohort, case-control

- Least: uncontrolled before-after, cross-sectional

The team combines suitability of design with quality of execution to assess each review’s body of evidence. (See example of body of evidence table.)
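
One way to picture the combination of suitability and quality of execution is a tally of included studies by both attributes. The sketch below uses the design categories listed above. The `body_of_evidence` function and the exact design labels used as dictionary keys are assumptions for illustration, and the real body of evidence table may be laid out differently.

```python
from collections import Counter

# Design-to-suitability mapping taken from the categories listed above.
SUITABILITY = {
    "randomized controlled trial": "greatest",
    "non-randomized trial": "greatest",
    "prospective cohort": "greatest",
    "other design with concurrent comparison": "greatest",
    "interrupted time series": "moderate",
    "retrospective cohort": "moderate",
    "case-control": "moderate",
    "uncontrolled before-after": "least",
    "cross-sectional": "least",
}


def body_of_evidence(studies):
    """Count studies by (suitability, quality of execution).

    studies: iterable of (design, quality) pairs, with quality in
    {"good", "fair", "limited"}. Studies of limited quality are dropped,
    since they are excluded from the remainder of the review.
    """
    table = Counter()
    for design, quality in studies:
        if quality == "limited":
            continue
        table[(SUITABILITY[design], quality)] += 1
    return table


evidence = body_of_evidence([
    ("randomized controlled trial", "good"),
    ("prospective cohort", "good"),
    ("case-control", "fair"),
    ("cross-sectional", "limited"),
])
```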

Step 8: Analyze the Abstracted Data

After abstracting quality of execution and intervention details, the team identifies included studies that have evidence for the outcomes of interest. The team then organizes evidence for each outcome of interest separately and decides how best to organize data for analysis and display. The analytic framework helps the team organize evidence for outcomes of interest. In addition, they consider applicability and identify implementation considerations and evidence gaps.

In Brief

The analytic framework guides organization of data for analysis

During the analysis step, teams consider the analytic framework, applicability, impact, potential benefits and harms, implementation factors, and evidence gaps.

Analytic Principles

- Community Guide systematic reviews consider evidence for each recommendation outcome in the analytic framework, including behavior, health, morbidity, and mortality outcomes. (See example Analytic Framework [PDF – 297 KB].)

- The team documents the linkage from the intervention, through behavior and clinical changes, to recommendation outcomes (health or health-related outcomes).

- Evidence from included studies is used to:

  - Group and report studies by recommendation outcome,

  - Summarize or tabulate study information by evaluating similarities and differences of the included studies (e.g. organizing by study design), and

  - Translate data into a common effect estimate and convert, if needed, into similar descriptive or statistical formats.

    - The preferred method of combining data is to use the raw data provided in included studies to calculate absolute or relative change. Other times, the team may make use of adjusted measurements.

    - When studies provide similar outcome measurements that cannot be combined, these are assessed narratively.

- Assess patterns and relationships across and within studies for each recommendation outcome.
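
The text above does not spell out how absolute or relative change is computed from raw data. The sketch below shows one common formulation, offered as an assumption rather than as the team's documented method: change in the intervention group, net of change in the comparison group when one exists. Function and parameter names are illustrative.

```python
def absolute_change(i_pre, i_post, c_pre=None, c_post=None):
    """Absolute change in the intervention group, net of any comparison group.

    For outcomes reported as percentages, the result is in percentage points.
    """
    change = i_post - i_pre
    if c_pre is not None and c_post is not None:
        change -= c_post - c_pre
    return change


def relative_change(i_pre, i_post, c_pre=None, c_post=None):
    """Relative percent change, net of any comparison group.

    Single group:     (i_post / i_pre - 1) * 100
    With comparison:  ((i_post / i_pre) / (c_post / c_pre) - 1) * 100
    """
    if i_pre == 0:
        raise ValueError("baseline value must be nonzero")
    ratio = i_post / i_pre
    if c_pre is not None and c_post is not None:
        if c_pre == 0 or c_post == 0:
            raise ValueError("comparison values must be nonzero")
        ratio /= c_post / c_pre
    return (ratio - 1) * 100
```

With an intervention group moving from 50 to 60 and a comparison group moving from 50 to 55, the absolute change is 5 and the relative change is roughly 9.1%. Expressing every study this way puts different outcome scales on a common footing before they are summarized.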

Analysis Methods

- Using descriptive statistics (e.g., median, interquartile range) to report population characteristics (e.g. race or ethnicity), intervention characteristics (e.g. intervention components, duration), and study characteristics (e.g. sample size).

  - Study, intervention, setting, and population characteristics are also used to identify the potential subset of evidence to consider for effect modification and applicability analysis.

- Using narrative synthesis and descriptive statistics to report results from recommendation outcomes.

- Grouping studies by study design (e.g. analyzing studies of greatest suitability of design together) and generating summary effect estimates (absolute or relative change). (See example of study effect estimates display.)

- Depicting results visually by using scatterplots, graphs, and tabular displays.

- When there are three or more studies, the median and interquartile interval are calculated.

- When it is not possible to combine studies for a summary effect estimate or when fewer than three studies report an outcome, narrative results are reported in tables.

- Performing subgroup analysis to identify effect modifiers and compare subgroup analysis of interest with the overall summary results.
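
The three-study rule for summary estimates can be sketched with the standard library. The quartile convention is an assumption here: the text does not say which method the team uses, and different conventions give slightly different interval bounds for small samples. The `summarize_effects` name is hypothetical.

```python
from statistics import median, quantiles


def summarize_effects(effects):
    """Return the median and interquartile interval, or None for narrative reporting.

    effects: per-study effect estimates for one outcome, all on the same scale.
    """
    if len(effects) < 3:
        return None  # fewer than three studies: report results narratively
    # "inclusive" treats the data as the full set of studies (assumed convention).
    q1, _, q3 = quantiles(effects, n=4, method="inclusive")
    return {"n": len(effects), "median": median(effects), "iqi": (q1, q3)}


summary = summarize_effects([2.0, 4.0, 6.0, 8.0, 10.0])
```

The same function can be applied to a subgroup of studies and its output compared with the overall summary when looking for effect modifiers.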

Applicability Analysis

Once the team has determined applicability factors and a priori hypotheses, they can collect and analyze data for all applicability factors. With the data collected from the studies, combined with information gathered from the broader literature and team members, the team can accept or reject a priori hypotheses.

Applicability considerations include

- Examining differences in intervention characteristics, such as intervention intensity, as well as differences in study population and other key potential modifiers. (See applicability factors.)

- Analyzing a subset of studies that report on a particular factor of interest, which can also highlight important limitations. For example, when considering age, the evidence might suggest the intervention is effective for one age group and not another, or there may be too few studies to determine the intervention’s applicability to different age groups.

- Assessing the broader literature and expertise from the coordination team.

Applicability conclusions

- If factors did not influence intervention effectiveness, then review findings are applicable across the factors examined.

- If factors did influence intervention effectiveness, then there are differential review findings based on these factors.

Assessing Meaningful Impact

To assess the public health impact of the intervention, CPSTF considers evidence documented by

- Included studies in the systematic review

- Studies outside of the systematic review

- Other Community Guide systematic reviews

- Consistency of results: Do most studies demonstrate an effect in the direction that favors the intervention for recommendation outcomes?

- Magnitude of results: Is the effect demonstrated across the body of evidence meaningful in a public health or population context? Most systematic reviews by the Community Guide will include shorter-term measures of change in outcomes (e.g., fruit and vegetable consumption) but do not include longer-term population attributable effects on health (e.g., progression to obesity or development of heart disease or cancer). CPSTF will use the results from a review on upstream outcomes with known links to downstream outcomes (e.g., adequate fruit and vegetable intake is linked to decreased adiposity [7] and improved weight management, and to reduced risk of heart disease and some cancers [8]).
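
The consistency question (do most studies favor the intervention?) can be supported by a simple count of effect directions. The sketch below is illustrative only: the `share_favoring` function is hypothetical, and no numeric threshold for "most" is given in the text, so none is built in.

```python
def share_favoring(effects, higher_is_better=True):
    """Fraction of studies whose effect estimate favors the intervention.

    effects: per-study effect estimates for one recommendation outcome.
    higher_is_better: True if an increase is the desired direction
    (e.g., screening rates), False if a decrease is (e.g., injury rates).
    Estimates of exactly zero are counted as not favoring.
    """
    if not effects:
        raise ValueError("at least one study is required")
    if higher_is_better:
        favorable = sum(1 for e in effects if e > 0)
    else:
        favorable = sum(1 for e in effects if e < 0)
    return favorable / len(effects)
```

A share like this describes direction only. Whether the size of the effect is meaningful is the separate magnitude question, which rests on judgment rather than a count.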

Step 9: CPSTF Makes Recommendations and Findings

CGP staff present results of systematic reviews, on behalf of the coordination team, to CPSTF for their deliberation and decisions about recommendations and findings. CPSTF members review and discuss the evidence, consider input from partners, and issue a recommendation or finding based on the strength and consistency of the effectiveness evidence.

In Brief

CPSTF reviews and discusses evidence presented by CGP staff.

CPSTF recommendations and findings are based on the meaningfulness and consistency of effectiveness evidence.

From Evidence to Recommendations and Findings

Primary Consideration for Reaching a CPSTF Recommendation

- Body of evidence with an adequate number of good or fair quality of execution studies with greatest, moderate, or least study design suitability

- Intervention effectiveness (are results consistent and meaningful?)

Additional Factors Considered for CPSTF Finding and Recommendation Statement

- Applicability (intervention works when, where, and for whom?)

- Additional benefits and potential harms (do harms outweigh intervention benefits?); see example below

Categories of CPSTF Recommendations and Findings

- Recommend, with strong or sufficient evidence

- Recommend against, with strong or sufficient evidence when the harms are greater than the benefits

- Insufficient evidence, when there is not enough evidence, or the evidence is too inconsistent, to determine intervention effectiveness. It does not mean that the intervention doesn’t work, but rather that we can’t tell yet if it works.

Information on Additional Benefits and Potential Harms

- Additional benefits may result from exposure to the intervention. For instance, a school-based health center might focus on improving students’ health while also reducing the time parents need to take off from work for their children’s doctor’s visits [6]. (Learn more here [PDF – 617 KB].)

- Potential harms may result when, despite improvements in other outcomes, an intervention may also have unintentional, harmful consequences. For example, an intervention of school dismissal to reduce pandemic flu transmission may reduce the likelihood of flu spreading, but it may also lead to an increased cost in childcare for parents. (Learn more here [PDF – 559 KB]).

- Included studies might address these points in additional benefits or potential harms, or they may be identified in the broader literature or team discussions.

CPSTF Evidence Decision Table

During the presentation to CPSTF, teams share the CPSTF evidence decision table. The decision table displays evidence of an intervention’s effectiveness based on the suitability of study design and quality of execution of the body of evidence and consistency of the results and meaningfulness of the effect. CPSTF may consider options for modifying findings and conclusions of the review, such as upgrading or downgrading the strength of evidence.

For example, CPSTF may decide to

- Upgrade the strength of evidence from sufficient to strong, based on a large magnitude of effect

- Downgrade the strength of evidence from strong to sufficient, based on concerns about the evidence or results

- Narrow the recommendation, based on differences of effectiveness across the body of evidence

- Downgrade a finding to Insufficient evidence, based on serious concerns about the evidence or results

- Downgrade a finding from Recommend to Recommend against, if evidence of an important harm is established

Considerations for Implementation

- Implementation considerations offer guidance on what others should be aware of when attempting to implement the intervention under review. This includes suggesting ways to best facilitate implementation, such as

  - Identifying any potential barriers or challenges and informing both practice and research in public health

  - Identifying implementation resources from CDC and other sources that can be used to implement CPSTF recommended interventions and programs

Evidence Gaps

- After determining the effectiveness and applicability of an intervention, the team highlights any evidence gaps that have emerged during the review process. These gaps may be identified in the literature, by the team, or from the applicability assessment.

- Common evidence gap questions include:

- Will the intervention work everywhere for everyone?

- How should programs be structured or delivered to ensure effectiveness?

- When a review receives an insufficient evidence finding, the team outlines gaps in the effectiveness evidence and may also include specific challenges.

Task Force Finding and Rationale Statement

After CPSTF decides on the finding statement using the Evidence Decision Table, the team develops a Task Force Finding and Rationale Statement (TFFRS) for each intervention. Each TFFRS is divided into multiple parts, including the

- Intervention definition

- Rationale, which includes:

- Description of the body of evidence

- Applicability issues

- Data quality concerns

- Potential benefits and harms

- Evidence gaps

- Implementation considerations for the public health community

Once approved, the TFFRS is disseminated to the public health community primarily through the Community Guide website as described in Step 10.

Step 10: Disseminate CPSTF Findings

After CPSTF issues a finding statement, the Community Guide Program establishes a dissemination team of CGP staff, CPSTF members, CDC program partners, CPSTF Liaisons, and external partners who work in areas related to the review.

The dissemination team develops web products that are posted for every review, such as an intervention summary and the TFFRS. Staff also develop a one-page, plain-language, formatted summary of the review that can be viewed online or downloaded and shared with colleagues or partners. Once these products are available, the team sends an email notice to subscribers interested in news or that particular topic area. The team also develops a home page feature on the Community Guide website, email updates, and tweets from @CPSTF.

In Brief

The dissemination team is made up of CGP staff, CDC program partners, CPSTF members and Liaisons, and other partners. This team collaborates to disseminate reviews’ findings through multiple channels.

Dissemination Channels

- The Community Guide website (www.thecommunityguide.org): The site includes all CPSTF recommendations and findings along with the effectiveness and economic evidence on which they are based. The site also has resources to help implement CPSTF recommendations and Community Guide in Action success stories.

- Email: Subscribers select topic areas of interest and receive relevant notices about CPSTF recommendations and findings, upcoming CPSTF meetings, and new products.

- Social Media: @CPSTF regularly updates Twitter followers with news about CPSTF recommendations and resources.

- Presentations: CPSTF evidence reviews and findings are shared during scientific meetings and conferences, invited talks, and webinar presentations.

Expanding Reach and Impact on Public Health through Partnerships

- Partners: CDC program partners and Liaisons to CPSTF use their own channels, such as newsletter articles, social media posts, and websites, to disseminate information about CPSTF recommendations.

- Peer-reviewed journals: Recommendation statements and evidence summaries are published in the American Journal of Preventive Medicine, the Journal of Public Health Management and Practice, and other peer-reviewed journals.

The CGP staff support CDC programs and other partners by developing and disseminating tools and resources to help practitioners implement CPSTF findings. (For more information, contact communityguide@cdc.gov.)
